Aviso: Este post foi traduzido para o português usando um modelo de tradução automática. Por favor, me avise se encontrar algum erro.

Accelerate é uma biblioteca da Hugging Face que permite executar o mesmo código PyTorch em qualquer configuração distribuída adicionando apenas quatro linhas de código.

Instalação

Para instalar accelerate com pip, simplesmente execute:

pip install accelerateE com conda:

conda install -c conda-forge accelerateConfiguração

Em cada ambiente em que o accelerate seja instalado, a primeira coisa que precisa ser feita é configurá-lo. Para isso, executamos no terminal:

`accelerate config`!accelerate configCopied

--------------------------------------------------------------------------------In which compute environment are you running?This machine--------------------------------------------------------------------------------multi-GPUHow many different machines will you use (use more than 1 for multi-node training)? [1]: 1Should distributed operations be checked while running for errors? This can avoid timeout issues but will be slower. [yes/NO]: noDo you wish to optimize your script with torch dynamo?[yes/NO]:noDo you want to use DeepSpeed? [yes/NO]: noDo you want to use FullyShardedDataParallel? [yes/NO]: noDo you want to use Megatron-LM ? [yes/NO]: noHow many GPU(s) should be used for distributed training? [1]:2What GPU(s) (by id) should be used for training on this machine as a comma-seperated list? [all]:0,1--------------------------------------------------------------------------------Do you wish to use FP16 or BF16 (mixed precision)?noaccelerate configuration saved at ~/.cache/huggingface/accelerate/default_config.yaml

No entendi completamente sua solicitação. Vou traduzir o texto fornecido para português, mantendo a estrutura e estilo do markdown original:

Em meu caso, as respostas foram

- Em qual ambiente de computação você está executando?

- [x] "Esta máquina"

- [_] "AWS (Amazon SageMaker)"

Quero configurá-lo no meu computador

- Qual tipo de máquina você está usando?

- [_] multi-CPU

- [_] multi-XPU

- [x] multi-GPU

- [_] multi-NPU

- [_] TPU

Como tenho 2 GPUs e quero executar códigos distribuídos entre elas, escolho

multi-GPU

- Quantas máquinas diferentes você usará (use mais de 1 para treinamento multinó)? [1]:

- 1

Escolho

1porque só vou executar no meu computador

- Deve-se verificar operações distribuídas em execução para erros? Isso pode evitar problemas de tempo limite, mas será mais lento. [sim/NÃO]:

- não

Com essa opção, pode-se escolher que o

accelerateverifique erros durante a execução, mas isso faria com que fosse mais lento, então escolhono, e em caso de erros mudo parayes.

- Deseja otimizar seu script com torch dynamo? [sim/NÃO]:

- não

- Você quer usar FullyShardedDataParallel? [sim/NÃO]:

- não

- Você quer usar o Megatron-LM? [sim/NÃO]:

- não

- Quantas GPUs devem ser usadas para treinamento distribuído? [1]:

- 2

Escolho

2porque tenho 2 GPUs

- Quais GPUs (por ID) devem ser usadas para treinamento nesta máquina como uma lista separada por vírgulas? [todas]:

- 0,1

Escolho

0,1porque quero usar as duas GPUs

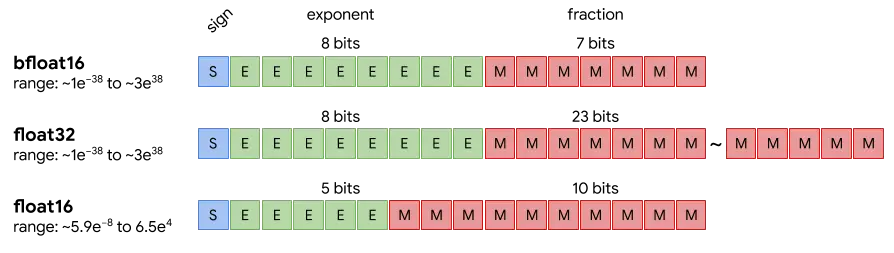

- Você deseja usar FP16 ou BF16 (precisão mista)?

- [x] não

- [_] fp16

- [_] bf16

- [_] fp8

Por enquanto escolho

no, pois para simplificar o código quando não usoaceleratevamos treinar em fp32, mas o ideal seria usar fp16

A configuração será salva em ~/.cache/huggingface/accelerate/default_config.yaml e pode ser modificada a qualquer momento. Vamos ver o que há dentro.

!cat ~/.cache/huggingface/accelerate/default_config.yamlCopied

compute_environment: LOCAL_MACHINEdebug: falsedistributed_type: MULTI_GPUdowncast_bf16: 'no'gpu_ids: 0,1machine_rank: 0main_training_function: mainmixed_precision: fp16num_machines: 1num_processes: 2rdzv_backend: staticsame_network: truetpu_env: []tpu_use_cluster: falsetpu_use_sudo: falseuse_cpu: false

Outra forma de ver a configuração que temos é executando em um terminal:

acelerar env!accelerate envCopied

Copy-and-paste the text below in your GitHub issue- `Accelerate` version: 0.28.0- Platform: Linux-5.15.0-105-generic-x86_64-with-glibc2.31- Python version: 3.11.8- Numpy version: 1.26.4- PyTorch version (GPU?): 2.2.1+cu121 (True)- PyTorch XPU available: False- PyTorch NPU available: False- System RAM: 31.24 GB- GPU type: NVIDIA GeForce RTX 3090- `Accelerate` default config:- compute_environment: LOCAL_MACHINE- distributed_type: MULTI_GPU- mixed_precision: fp16- use_cpu: False- debug: False- num_processes: 2- machine_rank: 0- num_machines: 1- gpu_ids: 0,1- rdzv_backend: static- same_network: True- main_training_function: main- downcast_bf16: no- tpu_use_cluster: False- tpu_use_sudo: False- tpu_env: []

Uma vez que configuramos o accelerate, podemos testar se fizemos tudo corretamente executando no terminal:

acelerar teste!accelerate testCopied

Running: accelerate-launch ~/miniconda3/envs/nlp/lib/python3.11/site-packages/accelerate/test_utils/scripts/test_script.pystdout: **Initialization**stdout: Testing, testing. 1, 2, 3.stdout: Distributed environment: DistributedType.MULTI_GPU Backend: ncclstdout: Num processes: 2stdout: Process index: 0stdout: Local process index: 0stdout: Device: cuda:0stdout:stdout: Mixed precision type: fp16stdout:stdout: Distributed environment: DistributedType.MULTI_GPU Backend: ncclstdout: Num processes: 2stdout: Process index: 1stdout: Local process index: 1stdout: Device: cuda:1stdout:stdout: Mixed precision type: fp16stdout:stdout:stdout: **Test process execution**stdout:stdout: **Test split between processes as a list**stdout:stdout: **Test split between processes as a dict**stdout:stdout: **Test split between processes as a tensor**stdout:stdout: **Test random number generator synchronization**stdout: All rng are properly synched.stdout:stdout: **DataLoader integration test**stdout: 0 1 tensor([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17,stdout: 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35,stdout: 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53,stdout: 54, 55, 56, 57, 58, 59, 60, 61, 62, 63], device='cuda:1') <class 'accelerate.data_loader.DataLoaderShard'>stdout: tensor([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17,stdout: 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35,stdout: 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53,stdout: 54, 55, 56, 57, 58, 59, 60, 61, 62, 63], device='cuda:0') <class 'accelerate.data_loader.DataLoaderShard'>stdout: Non-shuffled dataloader passing.stdout: Shuffled dataloader passing.stdout: Non-shuffled central dataloader passing.stdout: Shuffled central dataloader passing.stdout:stdout: **Training integration test**stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32stdout: Training yielded the same results on one CPU or distributed setup with no batch split.stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32stdout: Training yielded the same results on one CPU or distributes setup with batch split.stdout: FP16 training check.stdout: FP16 training check.stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32stdout: Keep fp32 wrapper check.stdout: Keep fp32 wrapper check.stdout: BF16 training check.stdout: BF16 training check.stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32Model dtype: torch.float32, torch.float32. Input dtype: torch.float32stdout:stdout: Training yielded the same results on one CPU or distributed setup with no batch split.stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32stdout: FP16 training check.stdout: Training yielded the same results on one CPU or distributes setup with batch split.stdout: FP16 training check.stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32stdout: Keep fp32 wrapper check.stdout: Keep fp32 wrapper check.stdout: BF16 training check.stdout: BF16 training check.stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32stdout: Model dtype: torch.float32, torch.float32. Input dtype: torch.float32stdout:stdout: **Breakpoint trigger test**Test is a success! You are ready for your distributed training!

Vemos que termina dizendo Test is a success! You are ready for your distributed training! por isso tudo está correto.

Treinamento

Otimização do treinamento

Código base

Vamos a fazer primeiro um código de treinamento básico e depois otimizá-lo para ver como é feito e como melhora.

Primeiro vamos buscar um dataset. No meu caso, vou usar o dataset tweet_eval, que é um dataset de classificação de tweets. Especificamente, vou baixar o subset emoji, que classifica os tweets com emoticons.

from datasets import load_datasetdataset = load_dataset("tweet_eval", "emoji")datasetCopied

DatasetDict({train: Dataset({features: ['text', 'label'],num_rows: 45000})test: Dataset({features: ['text', 'label'],num_rows: 50000})validation: Dataset({features: ['text', 'label'],num_rows: 5000})})

dataset["train"].infoCopied

DatasetInfo(description='', citation='', homepage='', license='', features={'text': Value(dtype='string', id=None), 'label': ClassLabel(names=['❤', '😍', '😂', '💕', '🔥', '😊', '😎', '✨', '💙', '😘', '📷', '🇺🇸', '☀', '💜', '😉', '💯', '😁', '🎄', '📸', '😜'], id=None)}, post_processed=None, supervised_keys=None, task_templates=None, builder_name='parquet', dataset_name='tweet_eval', config_name='emoji', version=0.0.0, splits={'train': SplitInfo(name='train', num_bytes=3808792, num_examples=45000, shard_lengths=None, dataset_name='tweet_eval'), 'test': SplitInfo(name='test', num_bytes=4262151, num_examples=50000, shard_lengths=None, dataset_name='tweet_eval'), 'validation': SplitInfo(name='validation', num_bytes=396704, num_examples=5000, shard_lengths=None, dataset_name='tweet_eval')}, download_checksums={'hf://datasets/tweet_eval@b3a375baf0f409c77e6bc7aa35102b7b3534f8be/emoji/train-00000-of-00001.parquet': {'num_bytes': 2609973, 'checksum': None}, 'hf://datasets/tweet_eval@b3a375baf0f409c77e6bc7aa35102b7b3534f8be/emoji/test-00000-of-00001.parquet': {'num_bytes': 3047341, 'checksum': None}, 'hf://datasets/tweet_eval@b3a375baf0f409c77e6bc7aa35102b7b3534f8be/emoji/validation-00000-of-00001.parquet': {'num_bytes': 281994, 'checksum': None}}, download_size=5939308, post_processing_size=None, dataset_size=8467647, size_in_bytes=14406955)

Vamos ver as classes

print(dataset["train"].info.features["label"].names)Copied

['❤', '😍', '😂', '💕', '🔥', '😊', '😎', '✨', '💙', '😘', '📷', '🇺🇸', '☀', '💜', '😉', '💯', '😁', '🎄', '📸', '😜']

E o número de classes

num_classes = len(dataset["train"].info.features["label"].names)num_classesCopied

20

Vemos que o conjunto de dados tem 20 classes

Vamos a ver a sequência máxima de cada split

max_len_train = 0max_len_val = 0max_len_test = 0split = "train"for i in range(len(dataset[split])):len_i = len(dataset[split][i]["text"])if len_i > max_len_train:max_len_train = len_isplit = "validation"for i in range(len(dataset[split])):len_i = len(dataset[split][i]["text"])if len_i > max_len_val:max_len_val = len_isplit = "test"for i in range(len(dataset[split])):len_i = len(dataset[split][i]["text"])if len_i > max_len_test:max_len_test = len_imax_len_train, max_len_val, max_len_testCopied

(142, 139, 167)

Então definimos a sequência máxima em geral como 130 para a tokenização

max_len = 130Copied

A nós interessa o conjunto de dados tokenizado, não as sequências brutas, então criamos um tokenizador

from transformers import AutoTokenizercheckpoints = "cardiffnlp/twitter-roberta-base-irony"tokenizer = AutoTokenizer.from_pretrained(checkpoints)Copied

Criamos uma função de tokenização

def tokenize_function(dataset):return tokenizer(dataset["text"], max_length=max_len, padding="max_length", truncation=True, return_tensors="pt")Copied

E agora tokenizamos o dataset

tokenized_dataset = {"train": dataset["train"].map(tokenize_function, batched=True, remove_columns=["text"]),"validation": dataset["validation"].map(tokenize_function, batched=True, remove_columns=["text"]),"test": dataset["test"].map(tokenize_function, batched=True, remove_columns=["text"]),}Copied

Map: 0%| | 0/45000 [00:00<?, ? examples/s]

Map: 0%| | 0/5000 [00:00<?, ? examples/s]

Map: 0%| | 0/50000 [00:00<?, ? examples/s]

Como vemos, agora temos os tokens (input_ids) e as máscaras de atenção (attention_mask), mas vamos ver que tipo de dados temos.

type(tokenized_dataset["train"][0]["input_ids"]), type(tokenized_dataset["train"][0]["attention_mask"]), type(tokenized_dataset["train"][0]["label"])Copied

(list, list, int)

tokenized_dataset["train"].set_format(type="torch", columns=['input_ids', 'attention_mask', 'label'])tokenized_dataset["validation"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])tokenized_dataset["test"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])type(tokenized_dataset["train"][0]["label"]), type(tokenized_dataset["train"][0]["input_ids"]), type(tokenized_dataset["train"][0]["attention_mask"])Copied

(torch.Tensor, torch.Tensor, torch.Tensor)

Criamos um DataLoader

import torchfrom torch.utils.data import DataLoaderBS = 64dataloader = {"train": DataLoader(tokenized_dataset["train"], batch_size=BS, shuffle=True),"validation": DataLoader(tokenized_dataset["validation"], batch_size=BS, shuffle=True),"test": DataLoader(tokenized_dataset["test"], batch_size=BS, shuffle=True),}Copied

Carregamos o modelo

from transformers import AutoModelForSequenceClassificationmodel = AutoModelForSequenceClassification.from_pretrained(checkpoints)Copied

Vamos a ver como é o modelo

modelCopied

RobertaForSequenceClassification((roberta): RobertaModel((embeddings): RobertaEmbeddings((word_embeddings): Embedding(50265, 768, padding_idx=1)(position_embeddings): Embedding(514, 768, padding_idx=1)(token_type_embeddings): Embedding(1, 768)(LayerNorm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)(dropout): Dropout(p=0.1, inplace=False))(encoder): RobertaEncoder((layer): ModuleList((0-11): 12 x RobertaLayer((attention): RobertaAttention((self): RobertaSelfAttention((query): Linear(in_features=768, out_features=768, bias=True)(key): Linear(in_features=768, out_features=768, bias=True)(value): Linear(in_features=768, out_features=768, bias=True)(dropout): Dropout(p=0.1, inplace=False))(output): RobertaSelfOutput((dense): Linear(in_features=768, out_features=768, bias=True)(LayerNorm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)(dropout): Dropout(p=0.1, inplace=False)))(intermediate): RobertaIntermediate((dense): Linear(in_features=768, out_features=3072, bias=True)(intermediate_act_fn): GELUActivation())(output): RobertaOutput((dense): Linear(in_features=3072, out_features=768, bias=True)(LayerNorm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)(dropout): Dropout(p=0.1, inplace=False))))))(classifier): RobertaClassificationHead((dense): Linear(in_features=768, out_features=768, bias=True)(dropout): Dropout(p=0.1, inplace=False)(out_proj): Linear(in_features=768, out_features=2, bias=True)))

Vamos ver sua última camada

model.classifier.out_projCopied

Linear(in_features=768, out_features=2, bias=True)

model.classifier.out_proj.in_features, model.classifier.out_proj.out_featuresCopied

(768, 2)

Vimos que nosso conjunto de dados possui 20 classes, mas este modelo foi treinado para 2 classes, então precisamos modificar a última camada.

model.classifier.out_proj = torch.nn.Linear(in_features=model.classifier.out_proj.in_features, out_features=num_classes, bias=True)model.classifier.out_projCopied

Linear(in_features=768, out_features=20, bias=True)

Agora sim

Agora criamos uma função de perda

loss_function = torch.nn.CrossEntropyLoss()Copied

Um otimizador

from torch.optim import Adamoptimizer = Adam(model.parameters(), lr=5e-4)Copied

E por último, uma métrica

import evaluatemetric = evaluate.load("accuracy")Copied

Vamos a verificar se tudo está bem com uma amostra.

sample = next(iter(dataloader["train"]))Copied

sample["input_ids"].shape, sample["attention_mask"].shapeCopied

(torch.Size([64, 130]), torch.Size([64, 130]))

Agora essa amostra é inserida no modelo

model.to("cuda")ouputs = model(input_ids=sample["input_ids"].to("cuda"), attention_mask=sample["attention_mask"].to("cuda"))ouputs.logits.shapeCopied

torch.Size([64, 20])

Vemos que o modelo gera 64 batches, o que está correto, pois configuramos BS = 20 e cada um com 20 saídas, o que está correto porque alteramos o modelo para que tenha a saída de 20 valores.

Obtemos a de maior valor

predictions = torch.argmax(ouputs.logits, axis=-1)predictions.shapeCopied

torch.Size([64])

Obtemos a loss

loss = loss_function(ouputs.logits, sample["label"].to("cuda"))loss.item()Copied

2.9990389347076416

E a precisão

accuracy = metric.compute(predictions=predictions, references=sample["label"])["accuracy"]accuracyCopied

0.015625

Já podemos criar um pequeno loop de treinamento

from fastprogress.fastprogress import master_bar, progress_barepochs = 1device = torch.device("cuda" if torch.cuda.is_available() else "cpu")model.to(device)master_progress_bar = master_bar(range(epochs))for i in master_progress_bar:model.train()progress_bar_train = progress_bar(dataloader["train"], parent=master_progress_bar)for batch in progress_bar_train:optimizer.zero_grad()input_ids = batch["input_ids"].to(device)attention_mask = batch["attention_mask"].to(device)labels = batch["label"].to(device)outputs = model(input_ids=input_ids, attention_mask=attention_mask)loss = loss_function(outputs['logits'], labels)master_progress_bar.child.comment = f'loss: {loss}'loss.backward()optimizer.step()model.eval()progress_bar_validation = progress_bar(dataloader["validation"], parent=master_progress_bar)for batch in progress_bar_validation:input_ids = batch["input_ids"].to(device)attention_mask = batch["attention_mask"].to(device)labels = batch["label"].to(device)with torch.no_grad():outputs = model(input_ids=input_ids, attention_mask=attention_mask)predictions = torch.argmax(outputs['logits'], axis=-1)accuracy = metric.add_batch(predictions=predictions, references=labels)accuracy = metric.compute()master_progress_bar.main_bar.comment = f"Validation accuracy: {accuracy['accuracy']} "Copied

<IPython.core.display.HTML object>

<IPython.core.display.HTML object>

Script com o código base

Na maioria da documentação do accelerate, é explicado como usar o accelerate com scripts, então por enquanto vamos fazer assim e no final explicaremos como fazê-lo com um notebook.

Primeiro vamos a criar uma pasta na qual vamos guardar os scripts

!mkdir accelerate_scriptsCopied

Agora escrevemos o código base em um script

%%writefile accelerate_scripts/01_code_base.pyimport torchfrom torch.utils.data import DataLoaderfrom torch.optim import Adamfrom datasets import load_datasetfrom transformers import AutoTokenizer, AutoModelForSequenceClassificationimport evaluatefrom fastprogress.fastprogress import master_bar, progress_bardataset = load_dataset("tweet_eval", "emoji")num_classes = len(dataset["train"].info.features["label"].names)max_len = 130checkpoints = "cardiffnlp/twitter-roberta-base-irony"tokenizer = AutoTokenizer.from_pretrained(checkpoints)def tokenize_function(dataset):return tokenizer(dataset["text"], max_length=max_len, padding="max_length", truncation=True, return_tensors="pt")tokenized_dataset = {"train": dataset["train"].map(tokenize_function, batched=True, remove_columns=["text"]),"validation": dataset["validation"].map(tokenize_function, batched=True, remove_columns=["text"]),"test": dataset["test"].map(tokenize_function, batched=True, remove_columns=["text"]),}tokenized_dataset["train"].set_format(type="torch", columns=['input_ids', 'attention_mask', 'label'])tokenized_dataset["validation"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])tokenized_dataset["test"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])BS = 64dataloader = {"train": DataLoader(tokenized_dataset["train"], batch_size=BS, shuffle=True),"validation": DataLoader(tokenized_dataset["validation"], batch_size=BS, shuffle=True),"test": DataLoader(tokenized_dataset["test"], batch_size=BS, shuffle=True),}model = AutoModelForSequenceClassification.from_pretrained(checkpoints)model.classifier.out_proj = torch.nn.Linear(in_features=model.classifier.out_proj.in_features, out_features=num_classes, bias=True)loss_function = torch.nn.CrossEntropyLoss()optimizer = Adam(model.parameters(), lr=5e-4)metric = evaluate.load("accuracy")EPOCHS = 1device = torch.device("cuda" if torch.cuda.is_available() else "cpu")model.to(device)master_progress_bar = master_bar(range(EPOCHS))for i in master_progress_bar:model.train()progress_bar_train = progress_bar(dataloader["train"], parent=master_progress_bar)for batch in progress_bar_train:optimizer.zero_grad()input_ids = batch["input_ids"].to(device)attention_mask = batch["attention_mask"].to(device)labels = batch["label"].to(device)outputs = model(input_ids=input_ids, attention_mask=attention_mask)loss = loss_function(outputs['logits'], labels)master_progress_bar.child.comment = f'loss: {loss}'loss.backward()optimizer.step()model.eval()progress_bar_validation = progress_bar(dataloader["validation"], parent=master_progress_bar)for batch in progress_bar_validation:input_ids = batch["input_ids"].to(device)attention_mask = batch["attention_mask"].to(device)labels = batch["label"].to(device)with torch.no_grad():outputs = model(input_ids=input_ids, attention_mask=attention_mask)predictions = torch.argmax(outputs['logits'], axis=-1)accuracy = metric.add_batch(predictions=predictions, references=labels)accuracy = metric.compute()master_progress_bar.main_bar.comment = f"Validation accuracy: {accuracy['accuracy']} "print(f"Accuracy = {accuracy['accuracy']}")Copied

Overwriting accelerate_scripts/01_code_base.py

E agora o executamos

%%time!python accelerate_scripts/01_code_base.pyCopied

Accuracy = 0.2112CPU times: user 2.12 s, sys: 391 ms, total: 2.51 sWall time: 3min 36s

Vemos que no meu computador levou cerca de 3 minutos e meio

Código com accelerate

Agora substituímos algumas coisas

- Em primeiro lugar, importamos

Acceleratore o inicializamos

from accelerate import Accelerator

acelerador = Acelerador()- Já não fazemos o típico

``` python

torch.device("cuda" if torch.cuda.is_available() else "cpu")

```

- Mas deixamos que seja

acelerateo responsável por escolher o dispositivo através

dispositivo = acelerador.dispositivo- Passamos os elementos relevantes para o treinamento pelo método

preparee não fazemos maismodel.to(device)

model, optimizer, dataloader["train"], dataloader["validation"] = preprare(model, optimizer, dataloader["train"], dataloader["validation"])- Já não enviamos os dados e o modelo para a GPU com

.to(device), pois oacceleratecuidou disso com o métodoprepare

- Em vez de fazer o backpropagation com

loss.backward(), deixamos queacceleratefaça isso com

accelerator.backward(loss)- Na hora de calcular a métrica no loop de validação, precisamos coletar os valores de todos os pontos, em caso de estar fazendo um treinamento distribuído, para isso fazemos

predictions = accelerator.gather_for_metrics(predictions)%%writefile accelerate_scripts/02_accelerate_base_code.pyimport torchfrom torch.utils.data import DataLoaderfrom torch.optim import Adamfrom datasets import load_datasetfrom transformers import AutoTokenizer, AutoModelForSequenceClassificationimport evaluatefrom fastprogress.fastprogress import master_bar, progress_bar# Importamos e inicializamos Acceleratorfrom accelerate import Acceleratoraccelerator = Accelerator()dataset = load_dataset("tweet_eval", "emoji")num_classes = len(dataset["train"].info.features["label"].names)max_len = 130checkpoints = "cardiffnlp/twitter-roberta-base-irony"tokenizer = AutoTokenizer.from_pretrained(checkpoints)def tokenize_function(dataset):return tokenizer(dataset["text"], max_length=max_len, padding="max_length", truncation=True, return_tensors="pt")tokenized_dataset = {"train": dataset["train"].map(tokenize_function, batched=True, remove_columns=["text"]),"validation": dataset["validation"].map(tokenize_function, batched=True, remove_columns=["text"]),"test": dataset["test"].map(tokenize_function, batched=True, remove_columns=["text"]),}tokenized_dataset["train"].set_format(type="torch", columns=['input_ids', 'attention_mask', 'label'])tokenized_dataset["validation"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])tokenized_dataset["test"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])BS = 64dataloader = {"train": DataLoader(tokenized_dataset["train"], batch_size=BS, shuffle=True),"validation": DataLoader(tokenized_dataset["validation"], batch_size=BS, shuffle=True),"test": DataLoader(tokenized_dataset["test"], batch_size=BS, shuffle=True),}model = AutoModelForSequenceClassification.from_pretrained(checkpoints)model.classifier.out_proj = torch.nn.Linear(in_features=model.classifier.out_proj.in_features, out_features=num_classes, bias=True)loss_function = torch.nn.CrossEntropyLoss()optimizer = Adam(model.parameters(), lr=5e-4)metric = evaluate.load("accuracy")EPOCHS = 1# device = torch.device("cuda" if torch.cuda.is_available() else "cpu")device = accelerator.device# model.to(device)model, optimizer, dataloader["train"], dataloader["validation"] = accelerator.prepare(model, optimizer, dataloader["train"], dataloader["validation"])master_progress_bar = master_bar(range(EPOCHS))for i in master_progress_bar:model.train()progress_bar_train = progress_bar(dataloader["train"], parent=master_progress_bar)for batch in progress_bar_train:optimizer.zero_grad()input_ids = batch["input_ids"]#.to(device)attention_mask = batch["attention_mask"]#.to(device)labels = batch["label"]#.to(device)outputs = model(input_ids=input_ids, attention_mask=attention_mask)loss = loss_function(outputs['logits'], labels)master_progress_bar.child.comment = f'loss: {loss}'# loss.backward()accelerator.backward(loss)optimizer.step()print(f"End of training epoch {i}, outputs['logits'].shape: {outputs['logits'].shape}, labels.shape: {labels.shape}")model.eval()progress_bar_validation = progress_bar(dataloader["validation"], parent=master_progress_bar)for batch in progress_bar_validation:input_ids = batch["input_ids"]#.to(device)attention_mask = batch["attention_mask"]#.to(device)labels = batch["label"]#.to(device)with torch.no_grad():outputs = model(input_ids=input_ids, attention_mask=attention_mask)predictions = torch.argmax(outputs['logits'], axis=-1)# Recopilamos las predicciones de todos los dispositivospredictions = accelerator.gather_for_metrics(predictions)labels = accelerator.gather_for_metrics(labels)accuracy = metric.add_batch(predictions=predictions, references=labels)accuracy = metric.compute()print(f"End of validation epoch {i}, outputs['logits'].shape: {outputs['logits'].shape}, labels.shape: {labels.shape}")master_progress_bar.main_bar.comment = f"Validation accuracy: {accuracy['accuracy']} "print(f"Accuracy = {accuracy['accuracy']}")Copied

Overwriting accelerate_scripts/02_accelerate_base_code.py

Se você notar, adicionei estas duas linhas print(f"End of training epoch {i}, outputs['logits'].shape: {outputs['logits'].shape}, labels.shape: {labels.shape}") e a linha print(f"End of validation epoch {i}, outputs['logits'].shape: {outputs['logits'].shape}, labels.shape: {labels.shape}"), adicionei propositalmente porque nos revelarão algo muito importante

Agora o executamos, para executar os scripts de accelerate faz-se com o comando accelerate launch

accelerate launch script.py%%time!accelerate launch accelerate_scripts/02_accelerate_base_code.pyCopied

End of training epoch 0, outputs['logits'].shape: torch.Size([64, 20]), labels.shape: torch.Size([64])End of training epoch 0, outputs['logits'].shape: torch.Size([64, 20]), labels.shape: torch.Size([64])End of validation epoch 0, outputs['logits'].shape: torch.Size([64, 20]), labels.shape: torch.Size([8])Accuracy = 0.206End of validation epoch 0, outputs['logits'].shape: torch.Size([64, 20]), labels.shape: torch.Size([8])Accuracy = 0.206CPU times: user 1.6 s, sys: 272 ms, total: 1.88 sWall time: 2min 37s

Vemos que antes demorava uns 3 minutos e meio e agora demora mais ou menos 2 minutos e meio. Bastante melhoria. Além disso, se observarmos os prints, podemos ver que foram impressos duas vezes.

E isso como pode ser? Bem, porque o accelerate paralelizou o treinamento nas duas GPUs que tenho, por isso foi muito mais rápido.

Além disso, quando executei o primeiro script, ou seja, quando não usei accelerate, a GPU estava quase cheia, enquanto que quando executei o segundo, ou seja, o que usa accelerate, as duas GPUs estavam muito pouco utilizadas, portanto podemos aumentar o batch size para tentar preencher as duas, vamos nisso!

%%writefile accelerate_scripts/03_accelerate_base_code_more_bs.pyimport torchfrom torch.utils.data import DataLoaderfrom torch.optim import Adamfrom datasets import load_datasetfrom transformers import AutoTokenizer, AutoModelForSequenceClassificationimport evaluatefrom fastprogress.fastprogress import master_bar, progress_bar# Importamos e inicializamos Acceleratorfrom accelerate import Acceleratoraccelerator = Accelerator()dataset = load_dataset("tweet_eval", "emoji")num_classes = len(dataset["train"].info.features["label"].names)max_len = 130checkpoints = "cardiffnlp/twitter-roberta-base-irony"tokenizer = AutoTokenizer.from_pretrained(checkpoints)def tokenize_function(dataset):return tokenizer(dataset["text"], max_length=max_len, padding="max_length", truncation=True, return_tensors="pt")tokenized_dataset = {"train": dataset["train"].map(tokenize_function, batched=True, remove_columns=["text"]),"validation": dataset["validation"].map(tokenize_function, batched=True, remove_columns=["text"]),"test": dataset["test"].map(tokenize_function, batched=True, remove_columns=["text"]),}tokenized_dataset["train"].set_format(type="torch", columns=['input_ids', 'attention_mask', 'label'])tokenized_dataset["validation"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])tokenized_dataset["test"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])BS = 128dataloader = {"train": DataLoader(tokenized_dataset["train"], batch_size=BS, shuffle=True),"validation": DataLoader(tokenized_dataset["validation"], batch_size=BS, shuffle=True),"test": DataLoader(tokenized_dataset["test"], batch_size=BS, shuffle=True),}model = AutoModelForSequenceClassification.from_pretrained(checkpoints)model.classifier.out_proj = torch.nn.Linear(in_features=model.classifier.out_proj.in_features, out_features=num_classes, bias=True)loss_function = torch.nn.CrossEntropyLoss()optimizer = Adam(model.parameters(), lr=5e-4)metric = evaluate.load("accuracy")EPOCHS = 1# device = torch.device("cuda" if torch.cuda.is_available() else "cpu")device = accelerator.device# model.to(device)model, optimizer, dataloader["train"], dataloader["validation"] = accelerator.prepare(model, optimizer, dataloader["train"], dataloader["validation"])master_progress_bar = master_bar(range(EPOCHS))for i in master_progress_bar:model.train()progress_bar_train = progress_bar(dataloader["train"], parent=master_progress_bar)for batch in progress_bar_train:optimizer.zero_grad()input_ids = batch["input_ids"]#.to(device)attention_mask = batch["attention_mask"]#.to(device)labels = batch["label"]#.to(device)outputs = model(input_ids=input_ids, attention_mask=attention_mask)loss = loss_function(outputs['logits'], labels)master_progress_bar.child.comment = f'loss: {loss}'# loss.backward()accelerator.backward(loss)optimizer.step()model.eval()progress_bar_validation = progress_bar(dataloader["validation"], parent=master_progress_bar)for batch in progress_bar_validation:input_ids = batch["input_ids"]#.to(device)attention_mask = batch["attention_mask"]#.to(device)labels = batch["label"]#.to(device)with torch.no_grad():outputs = model(input_ids=input_ids, attention_mask=attention_mask)predictions = torch.argmax(outputs['logits'], axis=-1)# Recopilamos las predicciones de todos los dispositivospredictions = accelerator.gather_for_metrics(predictions)labels = accelerator.gather_for_metrics(labels)accuracy = metric.add_batch(predictions=predictions, references=labels)accuracy = metric.compute()master_progress_bar.main_bar.comment = f"Validation accuracy: {accuracy['accuracy']} "print(f"Accuracy = {accuracy['accuracy']}")Copied

Overwriting accelerate_scripts/03_accelerate_base_code_more_bs.py

Removi os prints extras, pois já vimos que o código está sendo executado nas duas GPUs e aumentei o batch size de 64 para 128. Vamos executar para ver.

%%time!accelerate launch accelerate_scripts/03_accelerate_base_code_more_bs.pyCopied

Accuracy = 0.1052Accuracy = 0.1052CPU times: user 1.41 s, sys: 180 ms, total: 1.59 sWall time: 2min 22s

Aumentando o tamanho do batch reduziu alguns segundos o tempo de execução

Execução de processos

Execução de código em um único processo

Antes vimos que os prints eram impressos duas vezes, isso ocorre porque o accelerate cria tantos processos quantos dispositivos onde o código é executado, no meu caso ele cria dois processos por ter duas GPUs.

No entanto, nem todo o código deve ser executado em todos os processos, por exemplo, os prints, desaceleram muito o código, como para executá-lo várias vezes, se forem salvos os checkpoints, seriam salvos duas vezes, etc.

Para poder executar parte de um código em um único processo, tem que ser encapsulada em uma função e decorada com accelerator.on_local_main_process. Por exemplo, no seguinte código você vai ver que criei a seguinte função

@accelerator.on_local_main_process

def print_something(something):

print(algo)Outra opção é incluir o código dentro de um if accelerator.is_local_main_process como no seguinte código

if accelerator.is_local_main_process:

print("Algo")%%writefile accelerate_scripts/04_accelerate_base_code_some_code_in_one_process.pyimport torchfrom torch.utils.data import DataLoaderfrom torch.optim import Adamfrom datasets import load_datasetfrom transformers import AutoTokenizer, AutoModelForSequenceClassificationimport evaluatefrom fastprogress.fastprogress import master_bar, progress_bar# Importamos e inicializamos Acceleratorfrom accelerate import Acceleratoraccelerator = Accelerator()dataset = load_dataset("tweet_eval", "emoji")num_classes = len(dataset["train"].info.features["label"].names)max_len = 130checkpoints = "cardiffnlp/twitter-roberta-base-irony"tokenizer = AutoTokenizer.from_pretrained(checkpoints)def tokenize_function(dataset):return tokenizer(dataset["text"], max_length=max_len, padding="max_length", truncation=True, return_tensors="pt")tokenized_dataset = {"train": dataset["train"].map(tokenize_function, batched=True, remove_columns=["text"]),"validation": dataset["validation"].map(tokenize_function, batched=True, remove_columns=["text"]),"test": dataset["test"].map(tokenize_function, batched=True, remove_columns=["text"]),}tokenized_dataset["train"].set_format(type="torch", columns=['input_ids', 'attention_mask', 'label'])tokenized_dataset["validation"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])tokenized_dataset["test"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])BS = 128dataloader = {"train": DataLoader(tokenized_dataset["train"], batch_size=BS, shuffle=True),"validation": DataLoader(tokenized_dataset["validation"], batch_size=BS, shuffle=True),"test": DataLoader(tokenized_dataset["test"], batch_size=BS, shuffle=True),}model = AutoModelForSequenceClassification.from_pretrained(checkpoints)model.classifier.out_proj = torch.nn.Linear(in_features=model.classifier.out_proj.in_features, out_features=num_classes, bias=True)loss_function = torch.nn.CrossEntropyLoss()optimizer = Adam(model.parameters(), lr=5e-4)metric = evaluate.load("accuracy")EPOCHS = 1# device = torch.device("cuda" if torch.cuda.is_available() else "cpu")device = accelerator.device# model.to(device)model, optimizer, dataloader["train"], dataloader["validation"] = accelerator.prepare(model, optimizer, dataloader["train"], dataloader["validation"])@accelerator.on_local_main_processdef print_something(something):print(something)master_progress_bar = master_bar(range(EPOCHS))for i in master_progress_bar:model.train()progress_bar_train = progress_bar(dataloader["train"], parent=master_progress_bar)for batch in progress_bar_train:optimizer.zero_grad()input_ids = batch["input_ids"]#.to(device)attention_mask = batch["attention_mask"]#.to(device)labels = batch["label"]#.to(device)outputs = model(input_ids=input_ids, attention_mask=attention_mask)loss = loss_function(outputs['logits'], labels)master_progress_bar.child.comment = f'loss: {loss}'# loss.backward()accelerator.backward(loss)optimizer.step()model.eval()progress_bar_validation = progress_bar(dataloader["validation"], parent=master_progress_bar)for batch in progress_bar_validation:input_ids = batch["input_ids"]#.to(device)attention_mask = batch["attention_mask"]#.to(device)labels = batch["label"]#.to(device)with torch.no_grad():outputs = model(input_ids=input_ids, attention_mask=attention_mask)predictions = torch.argmax(outputs['logits'], axis=-1)# Recopilamos las predicciones de todos los dispositivospredictions = accelerator.gather_for_metrics(predictions)labels = accelerator.gather_for_metrics(labels)accuracy = metric.add_batch(predictions=predictions, references=labels)accuracy = metric.compute()master_progress_bar.main_bar.comment = f"Validation accuracy: {accuracy['accuracy']} "# print(f"Accuracy = {accuracy['accuracy']}")print_something(f"Accuracy = {accuracy['accuracy']}")if accelerator.is_local_main_process:print(f"End of script with {accuracy['accuracy']} accuracy")Copied

Overwriting accelerate_scripts/04_accelerate_base_code_some_code_in_one_process.py

Vamos executá-lo para ver.

%%time!accelerate launch accelerate_scripts/04_accelerate_base_code_some_code_in_one_process.pyCopied

Accuracy = 0.2098End of script with 0.2098 accuracyCPU times: user 1.38 s, sys: 197 ms, total: 1.58 sWall time: 2min 22s

Agora o print foi impresso apenas uma vez

No entanto, embora não seja muito visível, as barras de progresso são executadas em cada processo.

Não encontrei uma maneira de evitar isso com as barras de progresso do fastprogress, mas sim com as do tqdm, então vou substituir as barras de progresso do fastprogress pelas do tqdm e para que se executem em um único processo é necessário adicionar o argumento disable=not accelerator.is_local_main_process

%%writefile accelerate_scripts/05_accelerate_base_code_some_code_in_one_process.pyimport torchfrom torch.utils.data import DataLoaderfrom torch.optim import Adamfrom datasets import load_datasetfrom transformers import AutoTokenizer, AutoModelForSequenceClassificationimport evaluateimport tqdm# Importamos e inicializamos Acceleratorfrom accelerate import Acceleratoraccelerator = Accelerator()dataset = load_dataset("tweet_eval", "emoji")num_classes = len(dataset["train"].info.features["label"].names)max_len = 130checkpoints = "cardiffnlp/twitter-roberta-base-irony"tokenizer = AutoTokenizer.from_pretrained(checkpoints)def tokenize_function(dataset):return tokenizer(dataset["text"], max_length=max_len, padding="max_length", truncation=True, return_tensors="pt")tokenized_dataset = {"train": dataset["train"].map(tokenize_function, batched=True, remove_columns=["text"]),"validation": dataset["validation"].map(tokenize_function, batched=True, remove_columns=["text"]),"test": dataset["test"].map(tokenize_function, batched=True, remove_columns=["text"]),}tokenized_dataset["train"].set_format(type="torch", columns=['input_ids', 'attention_mask', 'label'])tokenized_dataset["validation"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])tokenized_dataset["test"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])BS = 128dataloader = {"train": DataLoader(tokenized_dataset["train"], batch_size=BS, shuffle=True),"validation": DataLoader(tokenized_dataset["validation"], batch_size=BS, shuffle=True),"test": DataLoader(tokenized_dataset["test"], batch_size=BS, shuffle=True),}model = AutoModelForSequenceClassification.from_pretrained(checkpoints)model.classifier.out_proj = torch.nn.Linear(in_features=model.classifier.out_proj.in_features, out_features=num_classes, bias=True)loss_function = torch.nn.CrossEntropyLoss()optimizer = Adam(model.parameters(), lr=5e-4)metric = evaluate.load("accuracy")EPOCHS = 1# device = torch.device("cuda" if torch.cuda.is_available() else "cpu")device = accelerator.device# model.to(device)model, optimizer, dataloader["train"], dataloader["validation"] = accelerator.prepare(model, optimizer, dataloader["train"], dataloader["validation"])@accelerator.on_local_main_processdef print_something(something):print(something)for i in range(EPOCHS):model.train()# progress_bar_train = progress_bar(dataloader["train"], parent=master_progress_bar)progress_bar_train = tqdm.tqdm(dataloader["train"], disable=not accelerator.is_local_main_process)for batch in progress_bar_train:optimizer.zero_grad()input_ids = batch["input_ids"]#.to(device)attention_mask = batch["attention_mask"]#.to(device)labels = batch["label"]#.to(device)outputs = model(input_ids=input_ids, attention_mask=attention_mask)loss = loss_function(outputs['logits'], labels)# master_progress_bar.child.comment = f'loss: {loss}'# loss.backward()accelerator.backward(loss)optimizer.step()model.eval()# progress_bar_validation = progress_bar(dataloader["validation"], parent=master_progress_bar)progress_bar_validation = tqdm.tqdm(dataloader["validation"], disable=not accelerator.is_local_main_process)for batch in progress_bar_validation:input_ids = batch["input_ids"]#.to(device)attention_mask = batch["attention_mask"]#.to(device)labels = batch["label"]#.to(device)with torch.no_grad():outputs = model(input_ids=input_ids, attention_mask=attention_mask)predictions = torch.argmax(outputs['logits'], axis=-1)# Recopilamos las predicciones de todos los dispositivospredictions = accelerator.gather_for_metrics(predictions)labels = accelerator.gather_for_metrics(labels)accuracy = metric.add_batch(predictions=predictions, references=labels)accuracy = metric.compute()# print(f"Accuracy = {accuracy['accuracy']}")print_something(f"Accuracy = {accuracy['accuracy']}")if accelerator.is_local_main_process:print(f"End of script with {accuracy['accuracy']} accuracy")Copied

Overwriting accelerate_scripts/05_accelerate_base_code_some_code_in_one_process.py

%%time!accelerate launch accelerate_scripts/05_accelerate_base_code_some_code_in_one_process.pyCopied

100%|█████████████████████████████████████████| 176/176 [02:01<00:00, 1.45it/s]100%|███████████████████████████████████████████| 20/20 [00:06<00:00, 3.30it/s]Accuracy = 0.2166End of script with 0.2166 accuracyCPU times: user 1.33 s, sys: 195 ms, total: 1.52 sWall time: 2min 22s

Nós mostramos um exemplo de como imprimir em um único processo, e isso foi uma maneira de executar processos em um único processo. Mas se o que você quer é apenas imprimir em um único processo, pode-se usar o método print do accelerate. Vamos ver o mesmo exemplo de antes com esse método.

%%writefile accelerate_scripts/06_accelerate_base_code_print_one_process.pyimport torchfrom torch.utils.data import DataLoaderfrom torch.optim import Adamfrom datasets import load_datasetfrom transformers import AutoTokenizer, AutoModelForSequenceClassificationimport evaluateimport tqdm# Importamos e inicializamos Acceleratorfrom accelerate import Acceleratoraccelerator = Accelerator()dataset = load_dataset("tweet_eval", "emoji")num_classes = len(dataset["train"].info.features["label"].names)max_len = 130checkpoints = "cardiffnlp/twitter-roberta-base-irony"tokenizer = AutoTokenizer.from_pretrained(checkpoints)def tokenize_function(dataset):return tokenizer(dataset["text"], max_length=max_len, padding="max_length", truncation=True, return_tensors="pt")tokenized_dataset = {"train": dataset["train"].map(tokenize_function, batched=True, remove_columns=["text"]),"validation": dataset["validation"].map(tokenize_function, batched=True, remove_columns=["text"]),"test": dataset["test"].map(tokenize_function, batched=True, remove_columns=["text"]),}tokenized_dataset["train"].set_format(type="torch", columns=['input_ids', 'attention_mask', 'label'])tokenized_dataset["validation"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])tokenized_dataset["test"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])BS = 128dataloader = {"train": DataLoader(tokenized_dataset["train"], batch_size=BS, shuffle=True),"validation": DataLoader(tokenized_dataset["validation"], batch_size=BS, shuffle=True),"test": DataLoader(tokenized_dataset["test"], batch_size=BS, shuffle=True),}model = AutoModelForSequenceClassification.from_pretrained(checkpoints)model.classifier.out_proj = torch.nn.Linear(in_features=model.classifier.out_proj.in_features, out_features=num_classes, bias=True)loss_function = torch.nn.CrossEntropyLoss()optimizer = Adam(model.parameters(), lr=5e-4)metric = evaluate.load("accuracy")EPOCHS = 1# device = torch.device("cuda" if torch.cuda.is_available() else "cpu")device = accelerator.device# model.to(device)model, optimizer, dataloader["train"], dataloader["validation"] = accelerator.prepare(model, optimizer, dataloader["train"], dataloader["validation"])for i in range(EPOCHS):model.train()# progress_bar_train = progress_bar(dataloader["train"], parent=master_progress_bar)progress_bar_train = tqdm.tqdm(dataloader["train"], disable=not accelerator.is_local_main_process)for batch in progress_bar_train:optimizer.zero_grad()input_ids = batch["input_ids"]#.to(device)attention_mask = batch["attention_mask"]#.to(device)labels = batch["label"]#.to(device)outputs = model(input_ids=input_ids, attention_mask=attention_mask)loss = loss_function(outputs['logits'], labels)# master_progress_bar.child.comment = f'loss: {loss}'# loss.backward()accelerator.backward(loss)optimizer.step()model.eval()# progress_bar_validation = progress_bar(dataloader["validation"], parent=master_progress_bar)progress_bar_validation = tqdm.tqdm(dataloader["validation"], disable=not accelerator.is_local_main_process)for batch in progress_bar_validation:input_ids = batch["input_ids"]#.to(device)attention_mask = batch["attention_mask"]#.to(device)labels = batch["label"]#.to(device)with torch.no_grad():outputs = model(input_ids=input_ids, attention_mask=attention_mask)predictions = torch.argmax(outputs['logits'], axis=-1)# Recopilamos las predicciones de todos los dispositivospredictions = accelerator.gather_for_metrics(predictions)labels = accelerator.gather_for_metrics(labels)accuracy = metric.add_batch(predictions=predictions, references=labels)accuracy = metric.compute()# print(f"Accuracy = {accuracy['accuracy']}")accelerator.print(f"Accuracy = {accuracy['accuracy']}")if accelerator.is_local_main_process:print(f"End of script with {accuracy['accuracy']} accuracy")Copied

Writing accelerate_scripts/06_accelerate_base_code_print_one_process.py

O executamos

%%time!accelerate launch accelerate_scripts/06_accelerate_base_code_print_one_process.pyCopied

Map: 100%|██████████████████████| 45000/45000 [00:02<00:00, 15433.52 examples/s]Map: 100%|████████████████████████| 5000/5000 [00:00<00:00, 11406.61 examples/s]Map: 100%|██████████████████████| 45000/45000 [00:02<00:00, 15036.87 examples/s]Map: 100%|██████████████████████| 50000/50000 [00:03<00:00, 14932.76 examples/s]Map: 100%|██████████████████████| 50000/50000 [00:03<00:00, 14956.60 examples/s]100%|█████████████████████████████████████████| 176/176 [02:00<00:00, 1.46it/s]100%|███████████████████████████████████████████| 20/20 [00:05<00:00, 3.33it/s]Accuracy = 0.2134End of script with 0.2134 accuracyCPU times: user 1.4 s, sys: 189 ms, total: 1.59 sWall time: 2min 27s

Execução de código em todos os processos

No entanto, há código que deve ser executado em todos os processos, por exemplo, se subirmos os checkpoints para o hub, então aqui temos duas opções: encapsular o código em uma função e decorá-la com accelerator.on_main_process

@accelerator.on_main_process

def fazer_minha_cozinha():

"Algo feito uma vez por servidor"

do_thing_once()ou colocar o código dentro de um if accelerator.is_main_process

if accelerator.is_main_process:

repo.push_to_hub()Como estamos fazendo treinamentos apenas para mostrar a biblioteca accelerate e o modelo que estamos treinando não é bom, não faz sentido agora fazer upload dos checkpoints para o hub, então vou fazer um exemplo com prints

%%writefile accelerate_scripts/07_accelerate_base_code_some_code_in_all_process.pyimport torchfrom torch.utils.data import DataLoaderfrom torch.optim import Adamfrom datasets import load_datasetfrom transformers import AutoTokenizer, AutoModelForSequenceClassificationimport evaluateimport tqdm# Importamos e inicializamos Acceleratorfrom accelerate import Acceleratoraccelerator = Accelerator()dataset = load_dataset("tweet_eval", "emoji")num_classes = len(dataset["train"].info.features["label"].names)max_len = 130checkpoints = "cardiffnlp/twitter-roberta-base-irony"tokenizer = AutoTokenizer.from_pretrained(checkpoints)def tokenize_function(dataset):return tokenizer(dataset["text"], max_length=max_len, padding="max_length", truncation=True, return_tensors="pt")tokenized_dataset = {"train": dataset["train"].map(tokenize_function, batched=True, remove_columns=["text"]),"validation": dataset["validation"].map(tokenize_function, batched=True, remove_columns=["text"]),"test": dataset["test"].map(tokenize_function, batched=True, remove_columns=["text"]),}tokenized_dataset["train"].set_format(type="torch", columns=['input_ids', 'attention_mask', 'label'])tokenized_dataset["validation"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])tokenized_dataset["test"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])BS = 128dataloader = {"train": DataLoader(tokenized_dataset["train"], batch_size=BS, shuffle=True),"validation": DataLoader(tokenized_dataset["validation"], batch_size=BS, shuffle=True),"test": DataLoader(tokenized_dataset["test"], batch_size=BS, shuffle=True),}model = AutoModelForSequenceClassification.from_pretrained(checkpoints)model.classifier.out_proj = torch.nn.Linear(in_features=model.classifier.out_proj.in_features, out_features=num_classes, bias=True)loss_function = torch.nn.CrossEntropyLoss()optimizer = Adam(model.parameters(), lr=5e-4)metric = evaluate.load("accuracy")EPOCHS = 1# device = torch.device("cuda" if torch.cuda.is_available() else "cpu")device = accelerator.device# model.to(device)model, optimizer, dataloader["train"], dataloader["validation"] = accelerator.prepare(model, optimizer, dataloader["train"], dataloader["validation"])@accelerator.on_local_main_processdef print_in_one_process(something):print(something)@accelerator.on_main_processdef print_in_all_processes(something):print(something)for i in range(EPOCHS):model.train()progress_bar_train = tqdm.tqdm(dataloader["train"], disable=not accelerator.is_local_main_process)for batch in progress_bar_train:optimizer.zero_grad()input_ids = batch["input_ids"]#.to(device)attention_mask = batch["attention_mask"]#.to(device)labels = batch["label"]#.to(device)outputs = model(input_ids=input_ids, attention_mask=attention_mask)loss = loss_function(outputs['logits'], labels)# loss.backward()accelerator.backward(loss)optimizer.step()model.eval()progress_bar_validation = tqdm.tqdm(dataloader["validation"], disable=not accelerator.is_local_main_process)for batch in progress_bar_validation:input_ids = batch["input_ids"]#.to(device)attention_mask = batch["attention_mask"]#.to(device)labels = batch["label"]#.to(device)with torch.no_grad():outputs = model(input_ids=input_ids, attention_mask=attention_mask)predictions = torch.argmax(outputs['logits'], axis=-1)# Recopilamos las predicciones de todos los dispositivospredictions = accelerator.gather_for_metrics(predictions)labels = accelerator.gather_for_metrics(labels)accuracy = metric.add_batch(predictions=predictions, references=labels)accuracy = metric.compute()print_in_one_process(f"Accuracy = {accuracy['accuracy']}")if accelerator.is_local_main_process:print(f"End of script with {accuracy['accuracy']} accuracy")print_in_all_processes(f"All process: Accuracy = {accuracy['accuracy']}")if accelerator.is_main_process:print(f"All process: End of script with {accuracy['accuracy']} accuracy")Copied

Overwriting accelerate_scripts/06_accelerate_base_code_some_code_in_all_process.py

Vamos executá-lo para ver.

%%time!accelerate launch accelerate_scripts/07_accelerate_base_code_some_code_in_all_process.pyCopied

Map: 100%|██████████████████████| 45000/45000 [00:03<00:00, 14518.44 examples/s]Map: 100%|██████████████████████| 45000/45000 [00:03<00:00, 14368.77 examples/s]Map: 100%|████████████████████████| 5000/5000 [00:00<00:00, 16466.33 examples/s]Map: 100%|████████████████████████| 5000/5000 [00:00<00:00, 14806.14 examples/s]Map: 100%|██████████████████████| 50000/50000 [00:03<00:00, 14253.33 examples/s]Map: 100%|██████████████████████| 50000/50000 [00:03<00:00, 14337.07 examples/s]100%|█████████████████████████████████████████| 176/176 [02:00<00:00, 1.46it/s]100%|███████████████████████████████████████████| 20/20 [00:05<00:00, 3.34it/s]Accuracy = 0.2092End of script with 0.2092 accuracyAll process: Accuracy = 0.2092All process: End of script with 0.2092 accuracyCPU times: user 1.42 s, sys: 216 ms, total: 1.64 sWall time: 2min 27s

Execução de código no processo X

Por último, podemos especificar em qual processo queremos executar o código. Para isso, é necessário criar uma função e decorá-la com @accelerator.on_process(process_index=0)

@accelerator.on_process(process_index=0)

def fazer_minha_coisa():

"Algo feito no índice do processo 0"

do_thing_on_index_zero()ou decorá-la com @accelerator.on_local_process(local_process_idx=0)

@accelerator.on_local_process(local_process_index=0)def fazer_minha_coisa():

"Algo feito no índice do processo 0 em cada servidor"

do_thing_on_index_zero_on_each_server()Aqui coloquei o processo 0, mas pode ser qualquer número

%%writefile accelerate_scripts/08_accelerate_base_code_some_code_in_some_process.pyimport torchfrom torch.utils.data import DataLoaderfrom torch.optim import Adamfrom datasets import load_datasetfrom transformers import AutoTokenizer, AutoModelForSequenceClassificationimport evaluateimport tqdm# Importamos e inicializamos Acceleratorfrom accelerate import Acceleratoraccelerator = Accelerator()dataset = load_dataset("tweet_eval", "emoji")num_classes = len(dataset["train"].info.features["label"].names)max_len = 130checkpoints = "cardiffnlp/twitter-roberta-base-irony"tokenizer = AutoTokenizer.from_pretrained(checkpoints)def tokenize_function(dataset):return tokenizer(dataset["text"], max_length=max_len, padding="max_length", truncation=True, return_tensors="pt")tokenized_dataset = {"train": dataset["train"].map(tokenize_function, batched=True, remove_columns=["text"]),"validation": dataset["validation"].map(tokenize_function, batched=True, remove_columns=["text"]),"test": dataset["test"].map(tokenize_function, batched=True, remove_columns=["text"]),}tokenized_dataset["train"].set_format(type="torch", columns=['input_ids', 'attention_mask', 'label'])tokenized_dataset["validation"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])tokenized_dataset["test"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])BS = 128dataloader = {"train": DataLoader(tokenized_dataset["train"], batch_size=BS, shuffle=True),"validation": DataLoader(tokenized_dataset["validation"], batch_size=BS, shuffle=True),"test": DataLoader(tokenized_dataset["test"], batch_size=BS, shuffle=True),}model = AutoModelForSequenceClassification.from_pretrained(checkpoints)model.classifier.out_proj = torch.nn.Linear(in_features=model.classifier.out_proj.in_features, out_features=num_classes, bias=True)loss_function = torch.nn.CrossEntropyLoss()optimizer = Adam(model.parameters(), lr=5e-4)metric = evaluate.load("accuracy")EPOCHS = 1# device = torch.device("cuda" if torch.cuda.is_available() else "cpu")device = accelerator.device# model.to(device)model, optimizer, dataloader["train"], dataloader["validation"] = accelerator.prepare(model, optimizer, dataloader["train"], dataloader["validation"])@accelerator.on_local_main_processdef print_in_one_process(something):print(something)@accelerator.on_main_processdef print_in_all_processes(something):print(something)@accelerator.on_process(process_index=0)def print_in_process_0(something):print("Process 0: " + something)@accelerator.on_local_process(local_process_index=1)def print_in_process_1(something):print("Process 1: " + something)for i in range(EPOCHS):model.train()progress_bar_train = tqdm.tqdm(dataloader["train"], disable=not accelerator.is_local_main_process)for batch in progress_bar_train:optimizer.zero_grad()input_ids = batch["input_ids"]#.to(device)attention_mask = batch["attention_mask"]#.to(device)labels = batch["label"]#.to(device)outputs = model(input_ids=input_ids, attention_mask=attention_mask)loss = loss_function(outputs['logits'], labels)# loss.backward()accelerator.backward(loss)optimizer.step()model.eval()progress_bar_validation = tqdm.tqdm(dataloader["validation"], disable=not accelerator.is_local_main_process)for batch in progress_bar_validation:input_ids = batch["input_ids"]#.to(device)attention_mask = batch["attention_mask"]#.to(device)labels = batch["label"]#.to(device)with torch.no_grad():outputs = model(input_ids=input_ids, attention_mask=attention_mask)predictions = torch.argmax(outputs['logits'], axis=-1)# Recopilamos las predicciones de todos los dispositivospredictions = accelerator.gather_for_metrics(predictions)labels = accelerator.gather_for_metrics(labels)accuracy = metric.add_batch(predictions=predictions, references=labels)accuracy = metric.compute()print_in_one_process(f"Accuracy = {accuracy['accuracy']}")if accelerator.is_local_main_process:print(f"End of script with {accuracy['accuracy']} accuracy")print_in_all_processes(f"All process: Accuracy = {accuracy['accuracy']}")if accelerator.is_main_process:print(f"All process: End of script with {accuracy['accuracy']} accuracy")print_in_process_0("End of process 0")print_in_process_1("End of process 1")Copied

Overwriting accelerate_scripts/07_accelerate_base_code_some_code_in_some_process.py

O executamos

%%time!accelerate launch accelerate_scripts/08_accelerate_base_code_some_code_in_some_process.pyCopied

Map: 100%|████████████████████████| 5000/5000 [00:00<00:00, 15735.58 examples/s]Map: 100%|██████████████████████| 50000/50000 [00:03<00:00, 14906.20 examples/s]100%|█████████████████████████████████████████| 176/176 [02:02<00:00, 1.44it/s]100%|███████████████████████████████████████████| 20/20 [00:06<00:00, 3.27it/s]Process 1: End of process 1Accuracy = 0.2128End of script with 0.2128 accuracyAll process: Accuracy = 0.2128All process: End of script with 0.2128 accuracyProcess 0: End of process 0CPU times: user 1.42 s, sys: 295 ms, total: 1.71 sWall time: 2min 37s

Sincronizar processos

Se temos código que deve ser executado em todos os processos, é interessante esperar que termine em todos os processos antes de fazer outra tarefa, então para isso usamos accelerator.wait_for_everyone()

Para vê-lo, vamos introduzir um atraso em uma das funções de impressão em um processo.

Além disso, coloquei um break no loop de treinamento para que não fique treinando por muito tempo, o que não é o que nos interessa agora.

%%writefile accelerate_scripts/09_accelerate_base_code_sync_all_process.pyimport torchfrom torch.utils.data import DataLoaderfrom torch.optim import Adamfrom datasets import load_datasetfrom transformers import AutoTokenizer, AutoModelForSequenceClassificationimport evaluateimport tqdmimport time# Importamos e inicializamos Acceleratorfrom accelerate import Acceleratoraccelerator = Accelerator()dataset = load_dataset("tweet_eval", "emoji")num_classes = len(dataset["train"].info.features["label"].names)max_len = 130checkpoints = "cardiffnlp/twitter-roberta-base-irony"tokenizer = AutoTokenizer.from_pretrained(checkpoints)def tokenize_function(dataset):return tokenizer(dataset["text"], max_length=max_len, padding="max_length", truncation=True, return_tensors="pt")tokenized_dataset = {"train": dataset["train"].map(tokenize_function, batched=True, remove_columns=["text"]),"validation": dataset["validation"].map(tokenize_function, batched=True, remove_columns=["text"]),"test": dataset["test"].map(tokenize_function, batched=True, remove_columns=["text"]),}tokenized_dataset["train"].set_format(type="torch", columns=['input_ids', 'attention_mask', 'label'])tokenized_dataset["validation"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])tokenized_dataset["test"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])BS = 128dataloader = {"train": DataLoader(tokenized_dataset["train"], batch_size=BS, shuffle=True),"validation": DataLoader(tokenized_dataset["validation"], batch_size=BS, shuffle=True),"test": DataLoader(tokenized_dataset["test"], batch_size=BS, shuffle=True),}model = AutoModelForSequenceClassification.from_pretrained(checkpoints)model.classifier.out_proj = torch.nn.Linear(in_features=model.classifier.out_proj.in_features, out_features=num_classes, bias=True)loss_function = torch.nn.CrossEntropyLoss()optimizer = Adam(model.parameters(), lr=5e-4)metric = evaluate.load("accuracy")EPOCHS = 1# device = torch.device("cuda" if torch.cuda.is_available() else "cpu")device = accelerator.device# model.to(device)model, optimizer, dataloader["train"], dataloader["validation"] = accelerator.prepare(model, optimizer, dataloader["train"], dataloader["validation"])@accelerator.on_local_main_processdef print_in_one_process(something):print(something)@accelerator.on_main_processdef print_in_all_processes(something):print(something)@accelerator.on_process(process_index=0)def print_in_process_0(something):time.sleep(2)print("Process 0: " + something)@accelerator.on_local_process(local_process_index=1)def print_in_process_1(something):print("Process 1: " + something)for i in range(EPOCHS):model.train()progress_bar_train = tqdm.tqdm(dataloader["train"], disable=not accelerator.is_local_main_process)for batch in progress_bar_train:optimizer.zero_grad()input_ids = batch["input_ids"]#.to(device)attention_mask = batch["attention_mask"]#.to(device)labels = batch["label"]#.to(device)outputs = model(input_ids=input_ids, attention_mask=attention_mask)loss = loss_function(outputs['logits'], labels)# loss.backward()accelerator.backward(loss)optimizer.step()breakmodel.eval()progress_bar_validation = tqdm.tqdm(dataloader["validation"], disable=not accelerator.is_local_main_process)for batch in progress_bar_validation:input_ids = batch["input_ids"]#.to(device)attention_mask = batch["attention_mask"]#.to(device)labels = batch["label"]#.to(device)with torch.no_grad():outputs = model(input_ids=input_ids, attention_mask=attention_mask)predictions = torch.argmax(outputs['logits'], axis=-1)# Recopilamos las predicciones de todos los dispositivospredictions = accelerator.gather_for_metrics(predictions)labels = accelerator.gather_for_metrics(labels)accuracy = metric.add_batch(predictions=predictions, references=labels)accuracy = metric.compute()print_in_one_process(f"Accuracy = {accuracy['accuracy']}")if accelerator.is_local_main_process:print(f"End of script with {accuracy['accuracy']} accuracy")print_in_all_processes(f"All process: Accuracy = {accuracy['accuracy']}")if accelerator.is_main_process:print(f"All process: End of script with {accuracy['accuracy']} accuracy")print_in_one_process("Printing with delay in process 0")print_in_process_0("End of process 0")print_in_process_1("End of process 1")accelerator.wait_for_everyone()print_in_one_process("End of script")Copied

Overwriting accelerate_scripts/08_accelerate_base_code_sync_all_process.py

O executamos

!accelerate launch accelerate_scripts/09_accelerate_base_code_sync_all_process.pyCopied

Map: 100%|████████████████████████| 5000/5000 [00:00<00:00, 14218.23 examples/s]Map: 100%|████████████████████████| 5000/5000 [00:00<00:00, 14666.25 examples/s]0%| | 0/176 [00:00<?, ?it/s]100%|███████████████████████████████████████████| 20/20 [00:05<00:00, 3.58it/s]Process 1: End of process 1Accuracy = 0.212End of script with 0.212 accuracyAll process: Accuracy = 0.212All process: End of script with 0.212 accuracyPrinting with delay in process 0Process 0: End of process 0End of script

Como pode ser visto primeiro foi impresso Process 1: End of process 1 e depois o resto, isso é porque o resto dos prints são feitos ou no processo 0 ou em todos os processos, então até que não termine o atraso de 2 segundos que colocamos, o resto do código não é executado.

Salvar e carregar o state dict

Quando treinamos, às vezes salvamos o estado para poder continuar em outro momento

Para salvar o estado teremos que usar os métodos save_state() e load_state()

%%writefile accelerate_scripts/10_accelerate_save_and_load_checkpoints.pyimport torchfrom torch.utils.data import DataLoaderfrom torch.optim import Adamfrom datasets import load_datasetfrom transformers import AutoTokenizer, AutoModelForSequenceClassificationimport evaluateimport tqdm# Importamos e inicializamos Acceleratorfrom accelerate import Acceleratoraccelerator = Accelerator()dataset = load_dataset("tweet_eval", "emoji")num_classes = len(dataset["train"].info.features["label"].names)max_len = 130checkpoints = "cardiffnlp/twitter-roberta-base-irony"tokenizer = AutoTokenizer.from_pretrained(checkpoints)def tokenize_function(dataset):return tokenizer(dataset["text"], max_length=max_len, padding="max_length", truncation=True, return_tensors="pt")tokenized_dataset = {"train": dataset["train"].map(tokenize_function, batched=True, remove_columns=["text"]),"validation": dataset["validation"].map(tokenize_function, batched=True, remove_columns=["text"]),"test": dataset["test"].map(tokenize_function, batched=True, remove_columns=["text"]),}tokenized_dataset["train"].set_format(type="torch", columns=['input_ids', 'attention_mask', 'label'])tokenized_dataset["validation"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])tokenized_dataset["test"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])BS = 128dataloader = {"train": DataLoader(tokenized_dataset["train"], batch_size=BS, shuffle=True),"validation": DataLoader(tokenized_dataset["validation"], batch_size=BS, shuffle=True),"test": DataLoader(tokenized_dataset["test"], batch_size=BS, shuffle=True),}model = AutoModelForSequenceClassification.from_pretrained(checkpoints)model.classifier.out_proj = torch.nn.Linear(in_features=model.classifier.out_proj.in_features, out_features=num_classes, bias=True)loss_function = torch.nn.CrossEntropyLoss()optimizer = Adam(model.parameters(), lr=5e-4)metric = evaluate.load("accuracy")@accelerator.on_local_main_processdef print_something(something):print(something)EPOCHS = 1# device = torch.device("cuda" if torch.cuda.is_available() else "cpu")device = accelerator.device# model.to(device)model, optimizer, dataloader["train"], dataloader["validation"] = accelerator.prepare(model, optimizer, dataloader["train"], dataloader["validation"])for i in range(EPOCHS):model.train()progress_bar_train = tqdm.tqdm(dataloader["train"], disable=not accelerator.is_local_main_process)for batch in progress_bar_train:optimizer.zero_grad()input_ids = batch["input_ids"]#.to(device)attention_mask = batch["attention_mask"]#.to(device)labels = batch["label"]#.to(device)outputs = model(input_ids=input_ids, attention_mask=attention_mask)loss = loss_function(outputs['logits'], labels)# loss.backward()accelerator.backward(loss)optimizer.step()model.eval()progress_bar_validation = tqdm.tqdm(dataloader["validation"], disable=not accelerator.is_local_main_process)for batch in progress_bar_validation:input_ids = batch["input_ids"]#.to(device)attention_mask = batch["attention_mask"]#.to(device)labels = batch["label"]#.to(device)with torch.no_grad():outputs = model(input_ids=input_ids, attention_mask=attention_mask)predictions = torch.argmax(outputs['logits'], axis=-1)# Recopilamos las predicciones de todos los dispositivospredictions = accelerator.gather_for_metrics(predictions)labels = accelerator.gather_for_metrics(labels)accuracy = metric.add_batch(predictions=predictions, references=labels)accuracy = metric.compute()# Guardamos los pesosaccelerator.save_state("accelerate_scripts/checkpoints")print_something(f"Accuracy = {accuracy['accuracy']}")# Cargamos los pesosaccelerator.load_state("accelerate_scripts/checkpoints")Copied

Overwriting accelerate_scripts/09_accelerate_save_and_load_checkpoints.py

O executamos

!accelerate launch accelerate_scripts/10_accelerate_save_and_load_checkpoints.pyCopied

100%|█████████████████████████████████████████| 176/176 [01:58<00:00, 1.48it/s]100%|███████████████████████████████████████████| 20/20 [00:05<00:00, 3.40it/s]Accuracy = 0.2142

Salvar o modelo

Quando o método prepare foi usado, o modelo foi embrulhado para poder ser salvo nos dispositivos necessários. Portanto, na hora de salvá-lo, temos que usar o método save_model, que primeiro o desembrulha e depois o salva. Além disso, se usarmos o parâmetro safe_serialization=True, o modelo será salvo como um safe tensor.

%%writefile accelerate_scripts/11_accelerate_save_model.pyimport torchfrom torch.utils.data import DataLoaderfrom torch.optim import Adamfrom datasets import load_datasetfrom transformers import AutoTokenizer, AutoModelForSequenceClassificationimport evaluateimport tqdm# Importamos e inicializamos Acceleratorfrom accelerate import Acceleratoraccelerator = Accelerator()dataset = load_dataset("tweet_eval", "emoji")num_classes = len(dataset["train"].info.features["label"].names)max_len = 130checkpoints = "cardiffnlp/twitter-roberta-base-irony"tokenizer = AutoTokenizer.from_pretrained(checkpoints)def tokenize_function(dataset):return tokenizer(dataset["text"], max_length=max_len, padding="max_length", truncation=True, return_tensors="pt")tokenized_dataset = {"train": dataset["train"].map(tokenize_function, batched=True, remove_columns=["text"]),"validation": dataset["validation"].map(tokenize_function, batched=True, remove_columns=["text"]),"test": dataset["test"].map(tokenize_function, batched=True, remove_columns=["text"]),}tokenized_dataset["train"].set_format(type="torch", columns=['input_ids', 'attention_mask', 'label'])tokenized_dataset["validation"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])tokenized_dataset["test"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])BS = 128dataloader = {"train": DataLoader(tokenized_dataset["train"], batch_size=BS, shuffle=True),"validation": DataLoader(tokenized_dataset["validation"], batch_size=BS, shuffle=True),"test": DataLoader(tokenized_dataset["test"], batch_size=BS, shuffle=True),}model = AutoModelForSequenceClassification.from_pretrained(checkpoints)model.classifier.out_proj = torch.nn.Linear(in_features=model.classifier.out_proj.in_features, out_features=num_classes, bias=True)loss_function = torch.nn.CrossEntropyLoss()optimizer = Adam(model.parameters(), lr=5e-4)metric = evaluate.load("accuracy")@accelerator.on_local_main_processdef print_something(something):print(something)EPOCHS = 1# device = torch.device("cuda" if torch.cuda.is_available() else "cpu")device = accelerator.device# model.to(device)model, optimizer, dataloader["train"], dataloader["validation"] = accelerator.prepare(model, optimizer, dataloader["train"], dataloader["validation"])for i in range(EPOCHS):model.train()progress_bar_train = tqdm.tqdm(dataloader["train"], disable=not accelerator.is_local_main_process)for batch in progress_bar_train:optimizer.zero_grad()input_ids = batch["input_ids"]#.to(device)attention_mask = batch["attention_mask"]#.to(device)labels = batch["label"]#.to(device)outputs = model(input_ids=input_ids, attention_mask=attention_mask)loss = loss_function(outputs['logits'], labels)# loss.backward()accelerator.backward(loss)optimizer.step()model.eval()progress_bar_validation = tqdm.tqdm(dataloader["validation"], disable=not accelerator.is_local_main_process)for batch in progress_bar_validation:input_ids = batch["input_ids"]#.to(device)attention_mask = batch["attention_mask"]#.to(device)labels = batch["label"]#.to(device)with torch.no_grad():outputs = model(input_ids=input_ids, attention_mask=attention_mask)predictions = torch.argmax(outputs['logits'], axis=-1)# Recopilamos las predicciones de todos los dispositivospredictions = accelerator.gather_for_metrics(predictions)labels = accelerator.gather_for_metrics(labels)accuracy = metric.add_batch(predictions=predictions, references=labels)accuracy = metric.compute()# Guardamos el modeloaccelerator.wait_for_everyone()accelerator.save_model(model, "accelerate_scripts/model", safe_serialization=True)print_something(f"Accuracy = {accuracy['accuracy']}")Copied

Writing accelerate_scripts/11_accelerate_save_model.py

O executamos

!accelerate launch accelerate_scripts/11_accelerate_save_model.pyCopied

100%|█████████████████████████████████████████| 176/176 [01:58<00:00, 1.48it/s]100%|███████████████████████████████████████████| 20/20 [00:05<00:00, 3.35it/s]Accuracy = 0.214

Salvar o modelo pretrained

Em modelos que usam a biblioteca transformers devemos salvar o modelo com o método save_pretrained para poder carregá-lo com o método from_pretrained. Antes de salvá-lo, é necessário desembrulhá-lo com o método unwrap_model.

%%writefile accelerate_scripts/12_accelerate_save_pretrained.pyimport torchfrom torch.utils.data import DataLoaderfrom torch.optim import Adamfrom datasets import load_datasetfrom transformers import AutoTokenizer, AutoModelForSequenceClassificationimport evaluateimport tqdm# Importamos e inicializamos Acceleratorfrom accelerate import Acceleratoraccelerator = Accelerator()dataset = load_dataset("tweet_eval", "emoji")num_classes = len(dataset["train"].info.features["label"].names)max_len = 130checkpoints = "cardiffnlp/twitter-roberta-base-irony"tokenizer = AutoTokenizer.from_pretrained(checkpoints)def tokenize_function(dataset):return tokenizer(dataset["text"], max_length=max_len, padding="max_length", truncation=True, return_tensors="pt")tokenized_dataset = {"train": dataset["train"].map(tokenize_function, batched=True, remove_columns=["text"]),"validation": dataset["validation"].map(tokenize_function, batched=True, remove_columns=["text"]),"test": dataset["test"].map(tokenize_function, batched=True, remove_columns=["text"]),}tokenized_dataset["train"].set_format(type="torch", columns=['input_ids', 'attention_mask', 'label'])tokenized_dataset["validation"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])tokenized_dataset["test"].set_format(type="torch", columns=['label', 'input_ids', 'attention_mask'])BS = 128dataloader = {"train": DataLoader(tokenized_dataset["train"], batch_size=BS, shuffle=True),"validation": DataLoader(tokenized_dataset["validation"], batch_size=BS, shuffle=True),"test": DataLoader(tokenized_dataset["test"], batch_size=BS, shuffle=True),}model = AutoModelForSequenceClassification.from_pretrained(checkpoints)model.classifier.out_proj = torch.nn.Linear(in_features=model.classifier.out_proj.in_features, out_features=num_classes, bias=True)loss_function = torch.nn.CrossEntropyLoss()optimizer = Adam(model.parameters(), lr=5e-4)metric = evaluate.load("accuracy")@accelerator.on_local_main_processdef print_something(something):print(something)EPOCHS = 1# device = torch.device("cuda" if torch.cuda.is_available() else "cpu")device = accelerator.device# model.to(device)model, optimizer, dataloader["train"], dataloader["validation"] = accelerator.prepare(model, optimizer, dataloader["train"], dataloader["validation"])for i in range(EPOCHS):model.train()progress_bar_train = tqdm.tqdm(dataloader["train"], disable=not accelerator.is_local_main_process)for batch in progress_bar_train:optimizer.zero_grad()input_ids = batch["input_ids"]#.to(device)attention_mask = batch["attention_mask"]#.to(device)labels = batch["label"]#.to(device)outputs = model(input_ids=input_ids, attention_mask=attention_mask)loss = loss_function(outputs['logits'], labels)# loss.backward()accelerator.backward(loss)optimizer.step()model.eval()progress_bar_validation = tqdm.tqdm(dataloader["validation"], disable=not accelerator.is_local_main_process)for batch in progress_bar_validation:input_ids = batch["input_ids"]#.to(device)attention_mask = batch["attention_mask"]#.to(device)labels = batch["label"]#.to(device)with torch.no_grad():outputs = model(input_ids=input_ids, attention_mask=attention_mask)predictions = torch.argmax(outputs['logits'], axis=-1)# Recopilamos las predicciones de todos los dispositivospredictions = accelerator.gather_for_metrics(predictions)labels = accelerator.gather_for_metrics(labels)accuracy = metric.add_batch(predictions=predictions, references=labels)accuracy = metric.compute()# Guardamos el modelo pretrainedunwrapped_model = accelerator.unwrap_model(model)unwrapped_model.save_pretrained("accelerate_scripts/model_pretrained",is_main_process=accelerator.is_main_process,save_function=accelerator.save,)print_something(f"Accuracy = {accuracy['accuracy']}")Copied

Writing accelerate_scripts/11_accelerate_save_pretrained.py

O executamos

!accelerate launch accelerate_scripts/12_accelerate_save_pretrained.pyCopied