MCP: Complete Guide to Creating MCP (Model Context Protocol) Servers and Clients with FastMCP

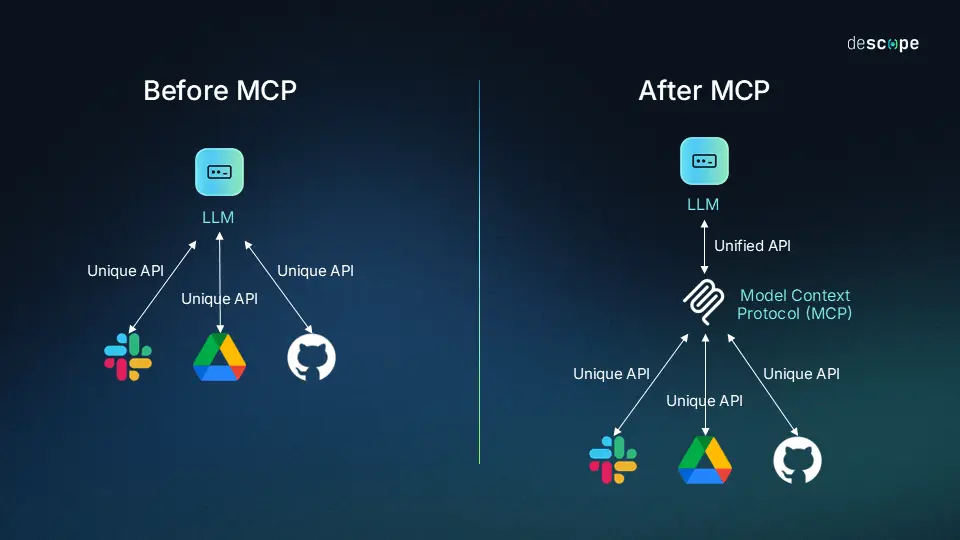

Learn what the Model Context Protocol (MCP) is: the open-source standard developed by Anthropic that gives AI models a common way to interact with external tools. In this practical, detailed guide, I walk you step by step through creating an MCP server and client from scratch with the fastmcp library. You will build an "intelligent" AI agent with Claude Sonnet that can interact with the GitHub API to query issues and repository information. We will cover everything from basic concepts to advanced features such as filtering tools by tags, server composition, static resources and dynamic resource templates, prompt generation, and secure authentication. Discover how MCP can standardize and simplify tool integration in your AI applications, much as USB unified the way peripherals connect!