Disclaimer: This post has been translated to English using a machine translation model. Please, let me know if you find any mistakes.

Introduction

Blip2 is an artificial intelligence capable of taking an image or video as input and having a conversation, answering questions, or providing context about what the input shows with great accuracy 🤯

Installation

To install this tool, it's best to create a new Anaconda environment.

!$ conda create -n blip2 python=3.9Copied

Now we dive into the environment

!$ conda activate blip2Copied

We install all the necessary modules

!$ conda install pytorch torchvision torchaudio pytorch-cuda=11.7 -c pytorch -c nvidiaCopied

!$ conda install -c anaconda pillowCopied

!$ conda install -y -c anaconda requestsCopied

!$ conda install -y -c anaconda jupyterCopied

Finally we install Blip2

!$ pip install salesforce-lavisCopied

Usage

We load the necessary libraries

import torchfrom PIL import Imageimport requestsfrom lavis.models import load_model_and_preprocessCopied

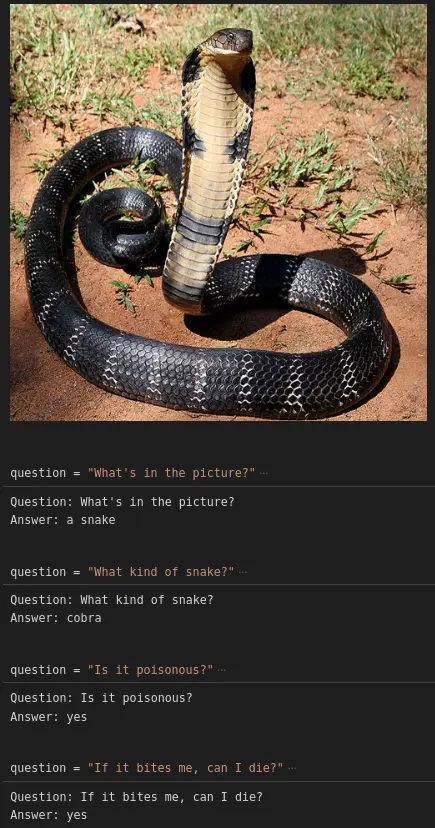

We load an example image

img_url = 'https://upload.wikimedia.org/wikipedia/commons/thumb/4/4d/12_-_The_Mystical_King_Cobra_and_Coffee_Forests.jpg/800px-12_-_The_Mystical_King_Cobra_and_Coffee_Forests.jpg'raw_image = Image.open(requests.get(img_url, stream=True).raw).convert('RGB')display(raw_image.resize((500, 500)))Copied

<PIL.Image.Image image mode=RGB size=500x500>

We set the GPU if there is one

device = torch.device("cuda" if torch.cuda.is_available() else 'cpu')deviceCopied

device(type='cuda')

We assign a model. In my case, with a computer that has 32 GB of RAM and a 3060 GPU with 12 GB of VRAM, I can't use all of them, so I've added a comment ok next to the models I was able to use, and the error I received for those I couldn't. If you have a computer with the same amount of RAM and VRAM, you'll know which ones you can use; if not, you'll need to test them.

# name = "blip2_opt"; model_type = "pretrain_opt2.7b" # ok# name = "blip2_opt"; model_type = "caption_coco_opt2.7b" # FAIL VRAM# name = "blip2_opt"; model_type = "pretrain_opt6.7b" # FAIL RAM# name = "blip2_opt"; model_type = "caption_coco_opt6.7b" # FAIL RAM# name = "blip2"; model_type = "pretrain" # FAIL type error# name = "blip2"; model_type = "coco" # okname = "blip2_t5"; model_type = "pretrain_flant5xl" # ok# name = "blip2_t5"; model_type = "caption_coco_flant5xl" # FAIL VRAM# name = "blip2_t5"; model_type = "pretrain_flant5xxl" # FAILmodel, vis_processors, _ = load_model_and_preprocess(name=name, model_type=model_type, is_eval=True, device=device)vis_processors.keys()Copied

Loading checkpoint shards: 0%| | 0/2 [00:00<?, ?it/s]

dict_keys(['train', 'eval'])

We prepare the image to feed it into the model

image = vis_processors["eval"](raw_image).unsqueeze(0).to(device)Copied

We analyze the image without asking anything

model.generate({"image": image})Copied

['a black and white snake']

We analyze the image by asking

prompt = NoneCopied

def prepare_prompt(prompt, question):if prompt is None:prompt = question + " Answer:"else:prompt = prompt + " " + question + " Answer:"return promptCopied

def get_answer(prompt, question, model):prompt = prepare_prompt(prompt, question)answer = model.generate({"image": image,"prompt": prompt})answer = answer[0]prompt = prompt + " " + answer + "."return prompt, answerCopied

question = "What's in the picture?"prompt, answer = get_answer(prompt, question, model)print(f"Question: {question}")print(f"Answer: {answer}")Copied

Question: What's in the picture?Answer: a snake

question = "What kind of snake?"prompt, answer = get_answer(prompt, question, model)print(f"Question: {question}")print(f"Answer: {answer}")Copied

Question: What kind of snake?Answer: cobra

question = "Is it poisonous?"prompt, answer = get_answer(prompt, question, model)print(f"Question: {question}")print(f"Answer: {answer}")Copied

Question: Is it poisonous?Answer: yes

question = "If it bites me, can I die?"prompt, answer = get_answer(prompt, question, model)print(f"Question: {question}")print(f"Answer: {answer}")Copied

Question: If it bites me, can I die?Answer: yes